mltype - Typing practice for programmers

If you clicked on this post hoping you would learn something about static typing, type annotations or similar, this is NOT the right article. The typing I talk about in this post is the thing you do with your keyboard. Or, to be precise:

The action or skill of writing something by means of a typewriter or computer.

A few months ago I decided to learn touch typing! I know what you are thinking… “Are you a faster typist than before and was all the pain worth it?” I would definitely say yes and yes. However, the internet is full of similar before and after testimonials and I am not going to write yet another one.

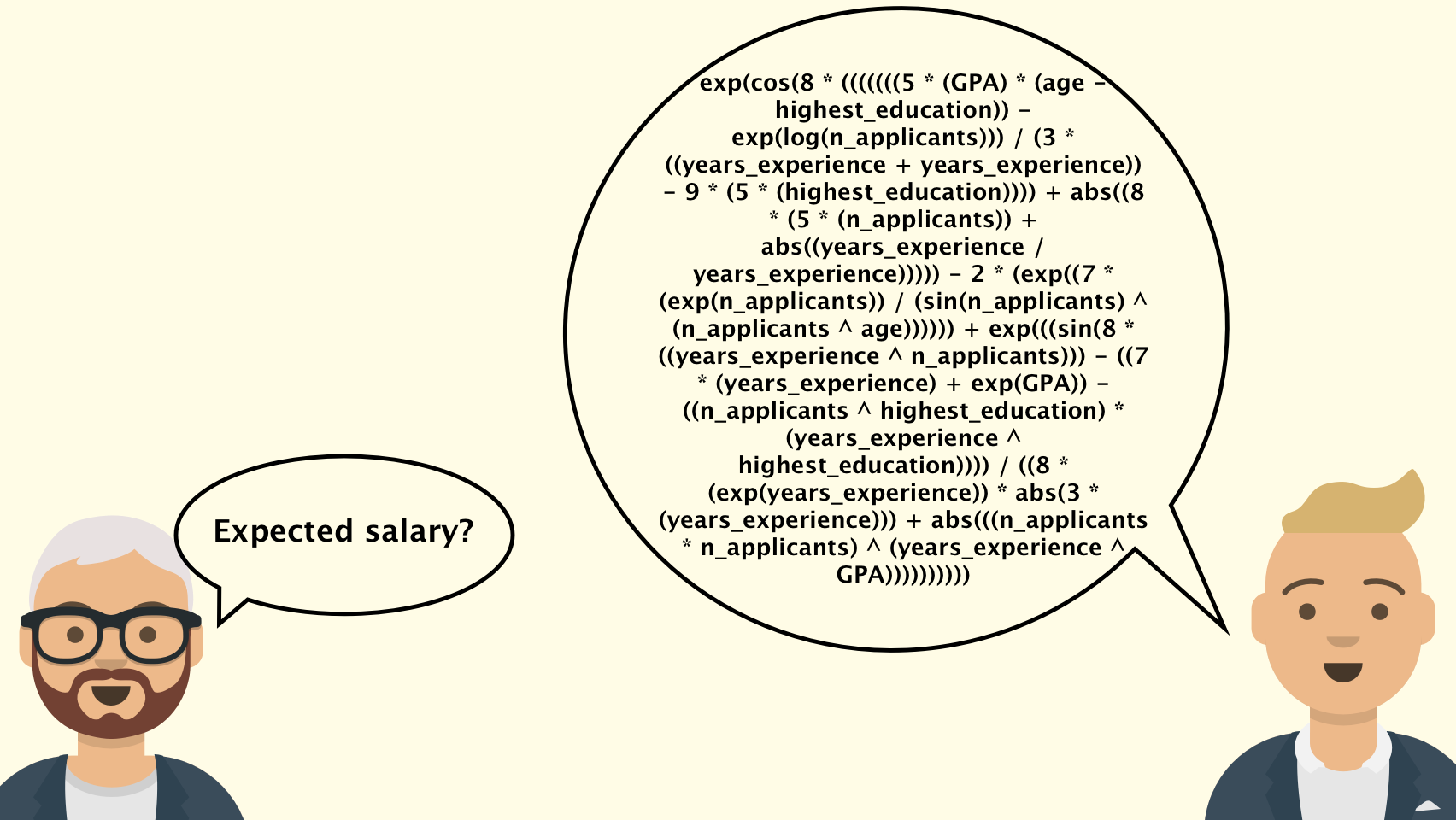

What I want to talk about is how surprised I was by how few resources there are for practising touch typing with programming languages. After a quick Google search you will probably discover the following sites:

While the above websites have multiple strong points, let me point out some of their shortcomings:

- Lack of variability and element of surprise

- Manual selection of source files and corresponding lines

- Not customizable

- Not free (typing.com)

- Not nerdy enough - would it not be possible to do it in the terminal?

For these reasons, I decided to give it a shot and write my own typing practice software: mltype.

In short, it is a command line tool written in Python. It uses neural networks to generate text that looks like a programming language (or a natural language). Additionally, it provides features that do not involve machine learning, like reading the practice text from a file or from standard input.

If you wonder what kind of “neural network” is behind it, I would strongly encourage you to (re)read The Unreasonable Effectiveness of Recurrent Neural Networks by Andrej Karpathy. mltype does more or less the same thing in the background. To be precise, it uses a character-level language model, which outputs a probability distribution over the next character given the previous characters. Most importantly, mltype tries to hide the complexity and boring details of training and inference from the user: generating text from an existing model and training a new model can each be done with a single command.
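To make the idea of "a probability distribution over the next character" a bit more concrete, here is a minimal sketch. It is NOT how mltype works internally: mltype uses a recurrent neural network, while this toy swaps in simple character counts so that it runs with nothing but the standard library. The function names (`train_char_model`, `next_char_distribution`, `generate`) and the `context_size` parameter are made up for this illustration.

```python
import random
from collections import Counter, defaultdict


def train_char_model(text, context_size=3):
    """Count which character follows each context of `context_size` characters.

    Count-based stand-in for a neural character-level model (an assumption
    made for illustration, not mltype's actual implementation).
    """
    counts = defaultdict(Counter)
    for i in range(context_size, len(text)):
        context = text[i - context_size:i]
        counts[context][text[i]] += 1
    return counts


def next_char_distribution(counts, context):
    """Return {char: probability} for the character that follows `context`."""
    followers = counts.get(context)
    if not followers:
        return {}  # context never seen during "training"
    total = sum(followers.values())
    return {ch: n / total for ch, n in followers.items()}


def generate(counts, seed, n_chars, context_size=3, rng=None):
    """Sample up to `n_chars` characters, one at a time, starting from `seed`."""
    rng = rng or random.Random()
    out = seed
    for _ in range(n_chars):
        dist = next_char_distribution(counts, out[-context_size:])
        if not dist:
            break  # nothing known about this context, stop early
        chars, probs = zip(*dist.items())
        out += rng.choices(chars, weights=probs, k=1)[0]
    return out


if __name__ == "__main__":
    corpus = "def add(a, b):\n    return a + b\n" * 3
    model = train_char_model(corpus)
    print(next_char_distribution(model, "def"))
    print(generate(model, "def", 40, rng=random.Random(0)))
```

A neural model plays the role of `next_char_distribution` here, with the difference that it can generalise to contexts it has never seen, which a lookup table of counts cannot.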

Below are some examples of different programming languages. All the models that generated them and many other pretrained models are available for download (see the README.md on GitHub).

C++

Go

Python

If you want to know more and try it out yourself, visit the links below!
