DeepDow - Portfolio optimization with deep learning

Introduction

DeepDow is a Python package that focuses on neural networks that are able to perform asset allocation in a single forward pass.

Single forward pass?

Portfolio optimization is traditionally a two-step procedure:

- Creation of beliefs about the future performance of securities

- Finding optimal portfolio given these beliefs

One well-known example of the two-step procedure (inspired by Markowitz) is

- Estimation of expected returns $\boldsymbol{\mu}$ and covariance matrix $\boldsymbol{\Sigma}$

- Solving a convex optimization problem, e.g. maximizing $\boldsymbol{\mu}^T \textbf{w} - \gamma \textbf{w}^T \boldsymbol{\Sigma} \textbf{w}$ such that $\textbf{w} \geq 0$ and ${\bf 1}^T \textbf{w}=1$.
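To make the two steps concrete, here is a minimal NumPy sketch. It is an illustration only, not code from deepdow: the function names, the synthetic returns and the value of `gamma` are assumptions, and the second step is solved with a plain projected gradient ascent instead of a dedicated convex solver.

```python
import numpy as np


def project_to_simplex(v):
    """Euclidean projection of v onto {w : w >= 0, sum(w) = 1}."""
    u = np.sort(v)[::-1]                      # sorted in descending order
    css = np.cumsum(u) - 1.0
    ks = np.arange(1, v.shape[0] + 1)
    rho = np.nonzero(u - css / ks > 0)[0][-1]
    tau = css[rho] / (rho + 1)
    return np.maximum(v - tau, 0.0)


def objective(w, mu, sigma, gamma):
    """Mean-variance objective mu^T w - gamma * w^T Sigma w."""
    return mu @ w - gamma * w @ sigma @ w


def markowitz_two_step(returns, gamma=1.0, n_iter=2000):
    # Step 1: beliefs about the future, estimated from historical returns.
    mu = returns.mean(axis=0)
    sigma = np.cov(returns, rowvar=False)

    # Step 2: maximize the objective over the simplex.
    n_assets = returns.shape[1]
    w = np.full(n_assets, 1.0 / n_assets)
    # Step size 1/L, where L is the Lipschitz constant of the gradient.
    lr = 1.0 / (2.0 * gamma * np.linalg.eigvalsh(sigma)[-1] + 1e-12)
    for _ in range(n_iter):
        grad = mu - 2.0 * gamma * sigma @ w
        w = project_to_simplex(w + lr * grad)
    return mu, sigma, w


# Synthetic daily returns for 4 hypothetical assets (assumed data).
rng = np.random.default_rng(0)
returns = rng.normal(loc=0.001, scale=0.02, size=(250, 4))
gamma = 5.0
mu, sigma, w = markowitz_two_step(returns, gamma=gamma)
w_uniform = np.full(4, 0.25)
```

Note that `gamma` has to be chosen by hand here, and the estimation step knows nothing about how its outputs will be used by the optimizer.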

Commonly, these two steps are completely separated since they require different approaches and different software.

deepdow abandons this paradigm entirely. It strives to merge the two steps mentioned above into one. The fundamental idea is to construct end-to-end deep networks that take the rawest features (returns, volumes, …) as input and output an asset allocation. This approach has multiple benefits:

- Hyperparameters can be turned into trainable weights (e.g. $\gamma$ in the second step)

- Leveraging deep learning to extract useful features for allocation, no need for technical indicators

- Single loss function

Core ideas

Financial timeseries can be seen as a 3D tensor with the following dimensions

- time

- asset

- indicator/channel

To give a specific example, one can investigate daily (time dimension) open price returns, close price returns and volumes (channel dimension) of multiple NASDAQ stocks (asset dimension). Graphically, one can imagine

By fixing a time step (representing now), we can split our tensor into 3 disjoint subtensors

Firstly, x represents all the knowledge about the past and present. The second tensor g represents information contained in the immediate future that we cannot use to make investment decisions. Finally, y is the future evolution of the market. One can now move along the time dimension and apply the same decomposition at every time step. This method of generating a dataset is called the rolling window.
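The rolling window can be sketched in a few lines of NumPy. This is a simplified illustration rather than deepdow's own data loading code: the function name, the parameter names `lookback` and `gap`, and the `(time, asset, channel)` axis order are assumptions made for this example.

```python
import numpy as np


def rolling_window(data, lookback, gap, horizon):
    """Split data of shape (n_timesteps, n_assets, n_channels) into samples.

    For every admissible index `now`, return a triple (x, g, y) where
    x covers the past and present, g the immediate future that is skipped,
    and y the future evolution over `horizon` steps.
    """
    n_timesteps = data.shape[0]
    samples = []
    for now in range(lookback - 1, n_timesteps - gap - horizon):
        x = data[now - lookback + 1:now + 1]             # includes `now`
        g = data[now + 1:now + 1 + gap]
        y = data[now + 1 + gap:now + 1 + gap + horizon]
        samples.append((x, g, y))
    return samples


# Toy tensor: 10 time steps, 3 assets, 2 channels (assumed sizes).
data = np.arange(10 * 3 * 2, dtype=float).reshape(10, 3, 2)
samples = rolling_window(data, lookback=4, gap=1, horizon=2)
x, g, y = samples[-1]
```

Each sample is disjoint along the time axis, so nothing from g or y leaks into the features x.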

Now we focus on a special type of neural network that takes x as input and returns a single weight allocation w over all assets such that $\sum_{i} w_{i} = 1$. In other words, given our past knowledge x we construct a portfolio w that we buy right away and hold for horizon time steps. Let F be some neural network with parameters $\theta$. The image below represents the high-level prediction pipeline.
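As a rough illustration of the interface, the sketch below implements a toy F in NumPy: a single linear map followed by a softmax. It is not an architecture from deepdow, and the names `toy_network` and `theta` as well as the tensor shapes are assumptions. The softmax additionally makes all weights positive, which is stricter than the sum-to-one requirement stated above.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax over a 1D array."""
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()


def toy_network(x, theta):
    """Map features x to an allocation w.

    x:     (lookback, n_assets, n_channels) past and present features
    theta: (lookback, n_channels) parameters shared across assets
    """
    # One score per asset: weighted sum over time and channels.
    scores = np.einsum("tac,tc->a", x, theta)
    # Softmax turns arbitrary scores into weights that sum to one.
    return softmax(scores)


rng = np.random.default_rng(0)
x = rng.normal(scale=0.01, size=(5, 3, 2))   # 5 steps, 3 assets, 2 channels
theta = rng.normal(size=(5, 2))
w = toy_network(x, theta)
```

Because `theta` is shared across assets, the same function can be applied to any number of assets without changing the parameters.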

The last piece of the puzzle is the definition of the loss function. In the most general terms, the per sample loss L is any function that inputs w and y and outputs a real number. However, in most cases we first compute the portfolio returns r over each time step in the horizon and then apply some summarization function S like mean, standard deviation, etc.
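A minimal sketch of this two-stage loss follows. It makes two simplifying assumptions that are not from the text: y holds simple returns for a single channel with shape `(horizon, n_assets)`, and the per-step portfolio return is the weighted sum of asset returns, which ignores the drift of weights in a buy-and-hold portfolio. The function names are hypothetical.

```python
import numpy as np


def portfolio_returns(w, y):
    """Portfolio return at each step of the horizon.

    w: (n_assets,) allocation, y: (horizon, n_assets) asset returns.
    """
    return y @ w


def per_sample_loss(w, y, summarize):
    """Compute r from (w, y), then reduce it to a real number with S."""
    return summarize(portfolio_returns(w, y))


def negative_mean(r):
    # Negated so that minimizing the loss maximizes the mean return.
    return -r.mean()


def standard_deviation(r):
    return r.std()


w = np.array([0.5, 0.5])
y = np.array([[0.02, 0.00],
              [-0.01, 0.03]])
r = portfolio_returns(w, y)
loss_mean = per_sample_loss(w, y, negative_mean)
loss_std = per_sample_loss(w, y, standard_deviation)
```

Swapping the summarization function changes the objective without touching the rest of the pipeline.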

Network layers

Ok, but how do we construct such networks? Rather than hardcoding a single architecture, deepdow implements powerful building blocks (layers) that users can put together themselves. The two most important groups of layers are

- Transformation layers: The goal is to extract features from the inputs

  - RNN

  - 1D convolutions

  - Time warping

  - …

- Allocation layers: Given input tensors they output a valid asset allocation

  - Differentiable convex optimization (cvxpylayers), i.e. Markowitz

  - Softmax (sparse and constrained variants as well)

  - Clustering based allocators

  - …

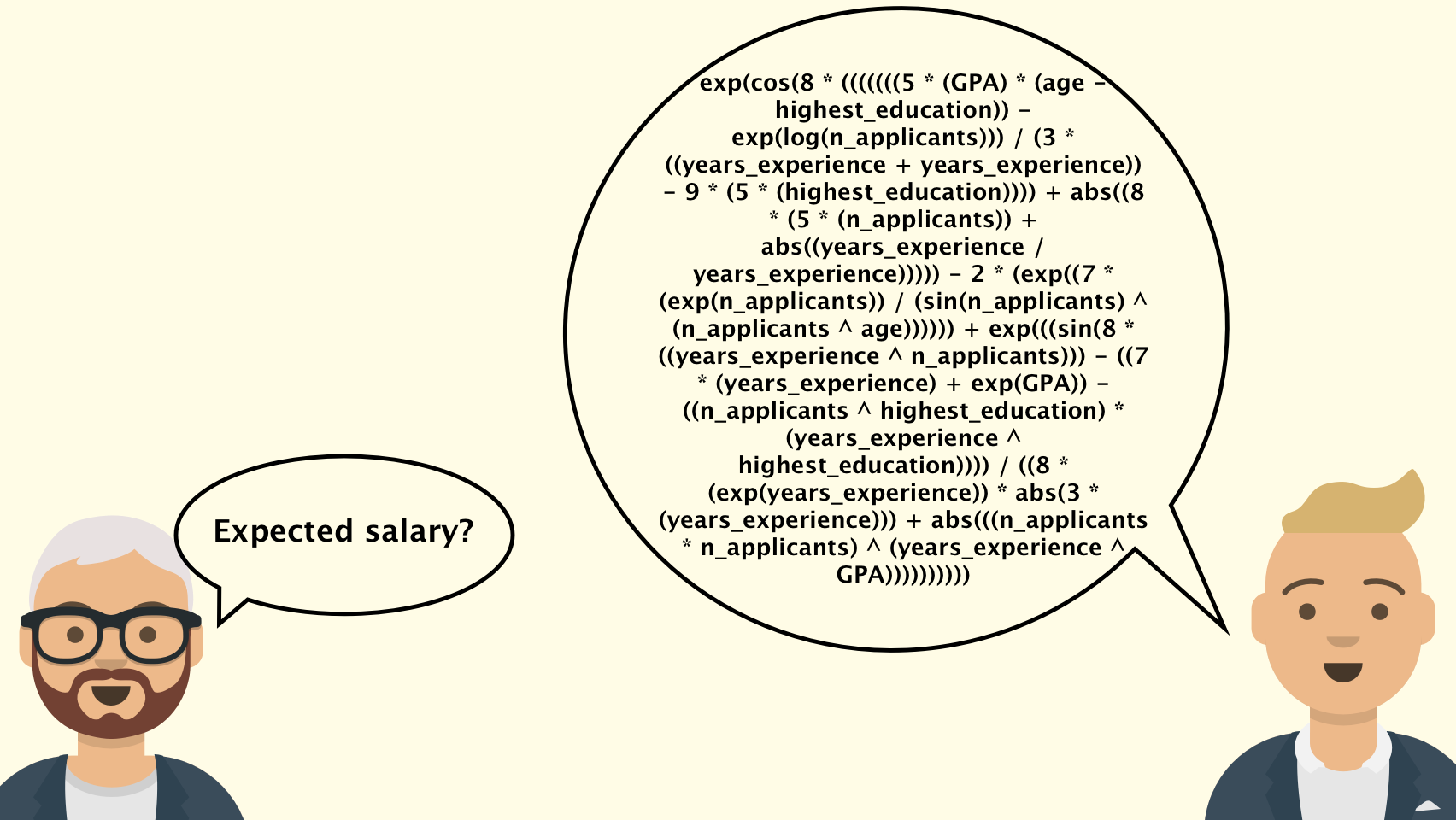

The general pattern is to create a pipeline of multiple transformation layers followed by a single allocation layer at the end. Note that almost any real valued hyperparameter can be turned into a learnable parameter or even predicted by some subnetwork.
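The pattern can be sketched with plain NumPy functions. None of the names below come from deepdow; they are hypothetical stand-ins for a 1D convolution layer, a pooling step and a softmax allocator. The softmax temperature, normally a hyperparameter, is stored next to the convolution kernel to show that it can be treated as just another parameter. An autograd framework would be needed to actually train either of them.

```python
import numpy as np


def conv1d_over_time(x, kernel):
    """Transformation layer: convolve each (asset, channel) series in time.

    x: (lookback, n_assets, n_channels), kernel: (k,).
    Returns an array of shape (lookback - k + 1, n_assets, n_channels).
    """
    return np.apply_along_axis(
        lambda s: np.convolve(s, kernel, mode="valid"), 0, x
    )


def average_pool(h):
    """Transformation layer: one score per asset (mean over time, channels)."""
    return h.mean(axis=(0, 2))


def softmax_allocator(scores, temperature):
    """Allocation layer: valid weights from arbitrary scores."""
    z = scores / temperature
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()


def pipeline(x, params):
    h = conv1d_over_time(x, params["kernel"])
    scores = average_pool(h)
    return softmax_allocator(scores, params["temperature"])


# All entries are candidates for learning, including the temperature.
params = {"kernel": np.array([0.5, 0.3, 0.2]), "temperature": 0.1}

rng = np.random.default_rng(0)
x = rng.normal(size=(10, 3, 2))
h = conv1d_over_time(x, params["kernel"])
w = pipeline(x, params)
w_sharp = pipeline(x, {**params, "temperature": 0.01})
w_flat = pipeline(x, {**params, "temperature": 1.0})
```

Lowering the temperature concentrates the allocation on the highest-scoring asset, while raising it pushes the weights toward uniform.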

Want to know more?

If this article sparked your interest, feel free to check the links below.

I will be more than happy to answer any questions. Additionally, constructive criticism or help with development is very welcome.
